Thanks to the power of the Web Audio API, you can now create immersive audio experiences directly in the browser, with no need for plug-ins. In this article, I’d like to share what I’ve learned while building the audio engine of Babylon.js, our open-source gaming engine.

By the end of this article, you’ll know how to build this kind of experience:

Usage: move the cubes with your mouse or touch near the center of the white circle to mix my music in this 3D experience using WebGL and Web Audio. View the source code.

Web Audio in a nutshell

Web Audio is the most advanced audio stack for the web.


From Learn Web Audio API

If you have ever tried to do anything other than stream sounds or music with the HTML5 audio element, you know how limited it is. Web Audio breaks those barriers and gives you access to the complete stream and audio pipeline, as in any modern native application. It works thanks to an audio routing graph made of audio nodes. This gives you precise control over timing, filters, gains, analysers, convolvers and 3D spatialization.

It is now widely supported (Microsoft Edge, Chrome, Opera, Firefox, Safari, iOS, Android, FirefoxOS and Windows Mobile 10). In Edge (and, I’m guessing, in other browsers too), it’s rendered on a separate thread from the main JS thread. This means that most of the time it will have little to no performance impact on your app or game. All browsers support at least the WAV and MP3 codecs, and some of them also support OGG. Edge even supports the multi-channel Dolby Digital Plus™ audio format! You need to keep these differences in mind to build a web app that runs everywhere, potentially by providing multiple sources so that every browser finds a format it can play.
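As a minimal sketch of the "multiple sources" idea, the helper below walks a list of candidate files and returns the first one the browser reports it can play. The function name `pickPlayableSource`, the file names and the MIME strings are illustrative assumptions, not code from the Babylon.js audio engine. The only browser API involved is the standard `canPlayType` method of the audio element, which is passed in as a parameter.

```javascript
// Hypothetical helper (not from Babylon.js): returns the URL of the first
// source the browser says it can play, or null if none is playable.
// `canPlayType` is injected so the logic does not depend on browser globals.
function pickPlayableSource(sources, canPlayType) {
  for (const source of sources) {
    // HTMLMediaElement.canPlayType returns "", "maybe" or "probably";
    // an empty string means the format is not supported.
    if (canPlayType(source.type) !== "") {
      return source.url;
    }
  }
  return null;
}

// Browser usage (file names are placeholders):
// const probe = document.createElement("audio");
// const url = pickPlayableSource(
//   [
//     { url: "music.ogg", type: 'audio/ogg; codecs="vorbis"' },
//     { url: "music.mp3", type: "audio/mpeg" },
//   ],
//   (type) => probe.canPlayType(type)
// );
```

Listing the preferred format first and a widely supported one such as MP3 last gives each browser the best file it can decode.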

Audio routing graph explained

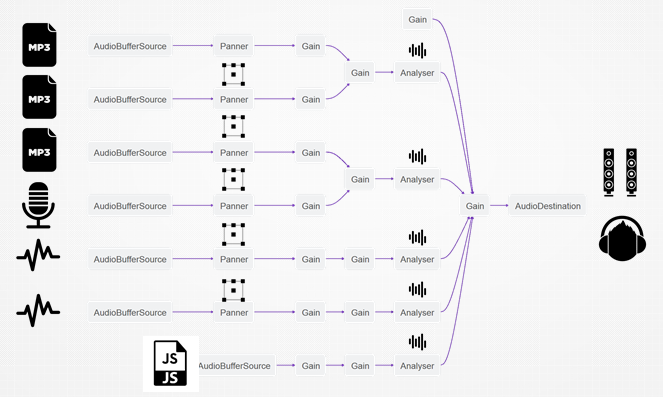

Note: this picture was built using the graph displayed in the awesome Web Audio tab of the Firefox DevTools. I love this tool. 🙂 So much so that I plan to mimic it via a Vorlon.js plug-in. I’ve started working on it, but it’s still a very early draft.

Let’s have a look at this diagram. Every node can take something as an input and have its output connected to the input of another node. In this case, we can see that MP3 files act as the source of the AudioBufferSource node, which is connected to a Panner node (which provides spatialization effects), then to Gain nodes (volume), then to an Analyser node (to access the frequencies of the sounds in real time), and finally to the AudioDestination node (your speakers). You can also see that you can control the volume (gain) of a specific sound or of several sounds at the same time. For instance, in this case, I’ve got a “global volume” handled by the final gain node just before the destination, and per-track volumes handled by gain nodes placed just before the analyser node.
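The chain described above can be sketched with standard Web Audio calls. This is an illustration under stated assumptions rather than the Babylon.js implementation: the function names `createOutputChain` and `addTrack` are hypothetical, a single analyser is assumed to be shared by all tracks, and `buffer` is assumed to be an AudioBuffer you have already decoded (for example with `decodeAudioData`).

```javascript
// Shared end of the graph: analyser -> global gain -> destination (speakers).
// Assumption: one analyser and one "global volume" gain serve every track.
function createOutputChain(ctx) {
  const analyser = ctx.createAnalyser(); // real-time frequency data
  const masterGain = ctx.createGain();   // global volume
  analyser.connect(masterGain);
  masterGain.connect(ctx.destination);
  return { analyser, masterGain };
}

// Per-track part: buffer source -> panner -> track gain -> shared analyser.
function addTrack(ctx, buffer, output) {
  const source = ctx.createBufferSource();
  source.buffer = buffer;

  const panner = ctx.createPanner();   // 3D spatialization
  const trackGain = ctx.createGain();  // volume of this track only

  source.connect(panner);
  panner.connect(trackGain);
  trackGain.connect(output.analyser);

  return { source, panner, trackGain };
}

// Browser usage (decodedBuffer is a placeholder for your own AudioBuffer):
// const ctx = new AudioContext();
// const output = createOutputChain(ctx);
// const track = addTrack(ctx, decodedBuffer, output);
// track.trackGain.gain.value = 0.5;   // lower this track only
// output.masterGain.gain.value = 0.8; // lower everything
// track.source.start();
```

Keeping the global gain as the last node before the destination means one value scales every track, while each track keeps its own gain for mixing.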


Source: http://www.sitepoint.com/feed/